Many organizations use a Testing Center of Excellence (TCoE) to establish standard processes and procedures, promote best practices, and use common tools to provide high-quality testing services at low cost. I recently had a chance to think about the use of a TCoE in a US federal agency. Specifically, I considered how to define an operating model for a TCoE that allows it to evolve over time and support an agency’s emerging testing needs. Here are some thoughts.

First, use a Governance board composed of stakeholders who represent the customers of the TCoE. The board preserves and strengthens stakeholder confidence in the TCoE via two complementary routes. One, it educates the TCoE about the needs of the customers. For example, it may ask the TCoE vendor to staff test engagements with testers who have prior knowledge of a particular domain. Two, its members champion the use of the TCoE within their own organizations. This is particularly important when there is resistance to the use of the TCoE within the agency.

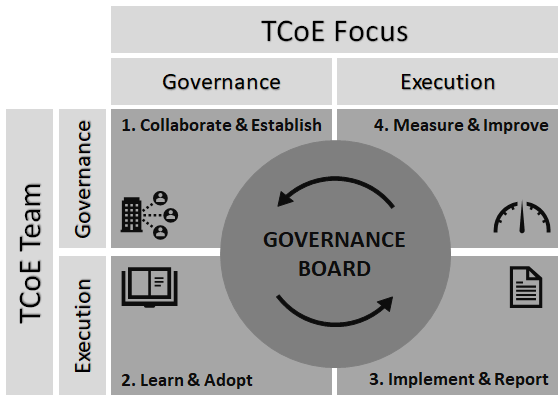

Second, use a small Governance team focused on the long-term governance tasks distinct from the Execution team focused on the day-to-day testing tasks. As shown in the graphic, creating two distinct teams allows proper focus in several important areas:

- The Governance team takes the lead to “collaborate & establish” standard processes and procedures, best practices, and common tools for the TCoE and makes them available in a centralized repository. The collaboration is primarily between the two teams and the stakeholders. If the idea of a TCoE within an agency is new, consider integrating change management principles to ease the transition.

- The Execution team “learns and adopts” the artifacts produced in the previous step with active guidance from the Governance team to ensure they know how to tailor them to the specific needs of their customers.

- The Execution team “implements and reports” the typical life cycle activities in a test engagement, such as plan, prepare, execute, report, and closeout, using the artifacts established in the first step and learned and adopted in the second.

- And finally, the Governance team “measures and improves” TCoE artifacts using results from the TCoE test engagements. Without this step, the critical feedback loop never closes, leading to ossified practices that don’t address the emerging needs of the agency.
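
The four activities above form a loop, with ownership alternating between the two teams. A minimal sketch of that cycle in Python follows; the `Phase` class, the `CYCLE` list, and the `next_phase` helper are hypothetical names introduced here for illustration and do not come from any TCoE tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    """One activity in the operating model and the team that leads it."""
    name: str
    owner: str


# Order follows the four bullets above; the names are illustrative only.
CYCLE = [
    Phase("collaborate & establish", "Governance"),
    Phase("learn & adopt", "Execution"),
    Phase("implement & report", "Execution"),
    Phase("measure & improve", "Governance"),
]


def next_phase(current: Phase) -> Phase:
    """Return the phase that follows `current`, wrapping around at the end."""
    index = CYCLE.index(current)
    return CYCLE[(index + 1) % len(CYCLE)]
```

The wrap-around is the point of the sketch: "measure & improve" feeds back into "collaborate & establish", which is the feedback loop the last bullet describes.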

In practice, the Governance team, if one exists at all, is usually tasked with producing test templates or managing the document repository, which does not add much value to the TCoE. Similarly, testers rarely evaluate their own practices objectively because they are busy testing. At one of the agencies, we actively sought feedback from our customers about the test engagements, used a Kanban board to give everyone a common understanding of the test engagements in progress, held regular briefings to ensure best practices spread quickly within the team, continually evaluated new tools to support emerging needs, and used a “QA of QA” model to evaluate work products produced by our own testing team to ensure standard processes and procedures were followed. All this without using a separate Governance team.

However, a well-planned operating model can help a TCoE overcome some of these challenges in a much more systematic way. And one more thing: how large a Governance team should be depends on many factors, but I think spending up to 5% of the TCoE budget on Governance is a reasonable start.
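
To make the 5% guideline concrete, here is a small sketch of the arithmetic in Python. The function name `governance_budget` and the total budget figure are assumptions chosen purely for illustration; only the 5% ceiling comes from the text above.

```python
def governance_budget(total_budget: float, share_percent: float = 5) -> float:
    """Return the amount of the TCoE budget set aside for Governance.

    share_percent defaults to 5, the upper bound suggested above.
    """
    if not 0 <= share_percent <= 100:
        raise ValueError("share_percent must be between 0 and 100")
    return total_budget * share_percent / 100


# Hypothetical annual TCoE budget, for illustration only.
example_total = 2_000_000
print(governance_budget(example_total))  # 100000.0
```

With that hypothetical budget, the ceiling works out to 100,000 for Governance, leaving the remaining 95% for the Execution team's test engagements.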

Hopefully, this post gives you an idea or two to help you with your own TCoE. Feel free to leave any comments or questions or write to me directly at anish.sharma at bpsconsulting.com.

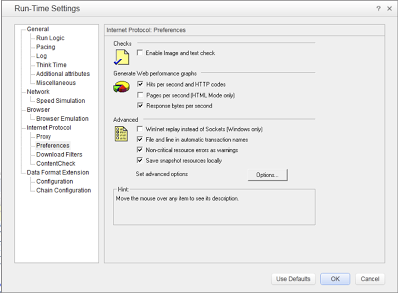

Turning on the WinInet option in the run-time settings of VuGen immediately made the output window of VuGen a beehive of activity. The server responded immediately. This option essentially allows LoadRunner to use Internet Explorer’s HTTP implementation under the covers. With this option, everything that IE can do is made available to LoadRunner. Unfortunately, the WinInet option comes with a penalty. According to the LoadRunner documentation (v.11.51), this option is not as scalable as the default sockets implementation. HP product support indicated that if the WinInet option is what allows script replay, then it is our only option. Luckily, we found a workaround by staying patient and using